Brands That Integrated AI in 2025: The AI Bots are Now Everywhere


Some years change what marketing looks like. 2025 changed what products are. The wave of brands that integrated AI grew so large that it now includes your search engine, your shopping app, your design tools, and even the apps you use to plan a trip. The real tell is how quickly brands with AI agents moved from “help” to “do”, and how fast summaries, overviews, and suggested next steps became the new interface. And if you’re noticing AI agents everywhere, you’re not imagining it: brands that now use AI are quietly training us to expect instant understanding on demand.

At-a-Glance

- AI summaries became the new “front page” for information, replacing endless scrolling with instant compression. (Google)

- AI shopping assistants shifted ecommerce from search bars to conversation and recommendations. (Amazon) (Walmart)

- Social platforms leaned into transparency and control, using AI to explain and tune what you see. (Wired)

- Creative tools stopped being software and started acting like collaborators you can talk to. (Adobe) (Canva)

- “Agentic” became the new ambition across business platforms: not just answers, but actions inside workflows. (Salesforce) (ServiceNow)

How These 2025 AI Rollouts Made the Cut

- Picked launches where AI is a real user-facing feature, not a vague “we’re exploring AI” line.

- Leaned on primary announcements first, then used credible reporting to confirm what actually shipped.

- Focused on AI agents, summaries, chatbots, and overviews people can touch in an app, not internal experiments.

1) Google: AI Overviews, AI Mode, and agentic Search

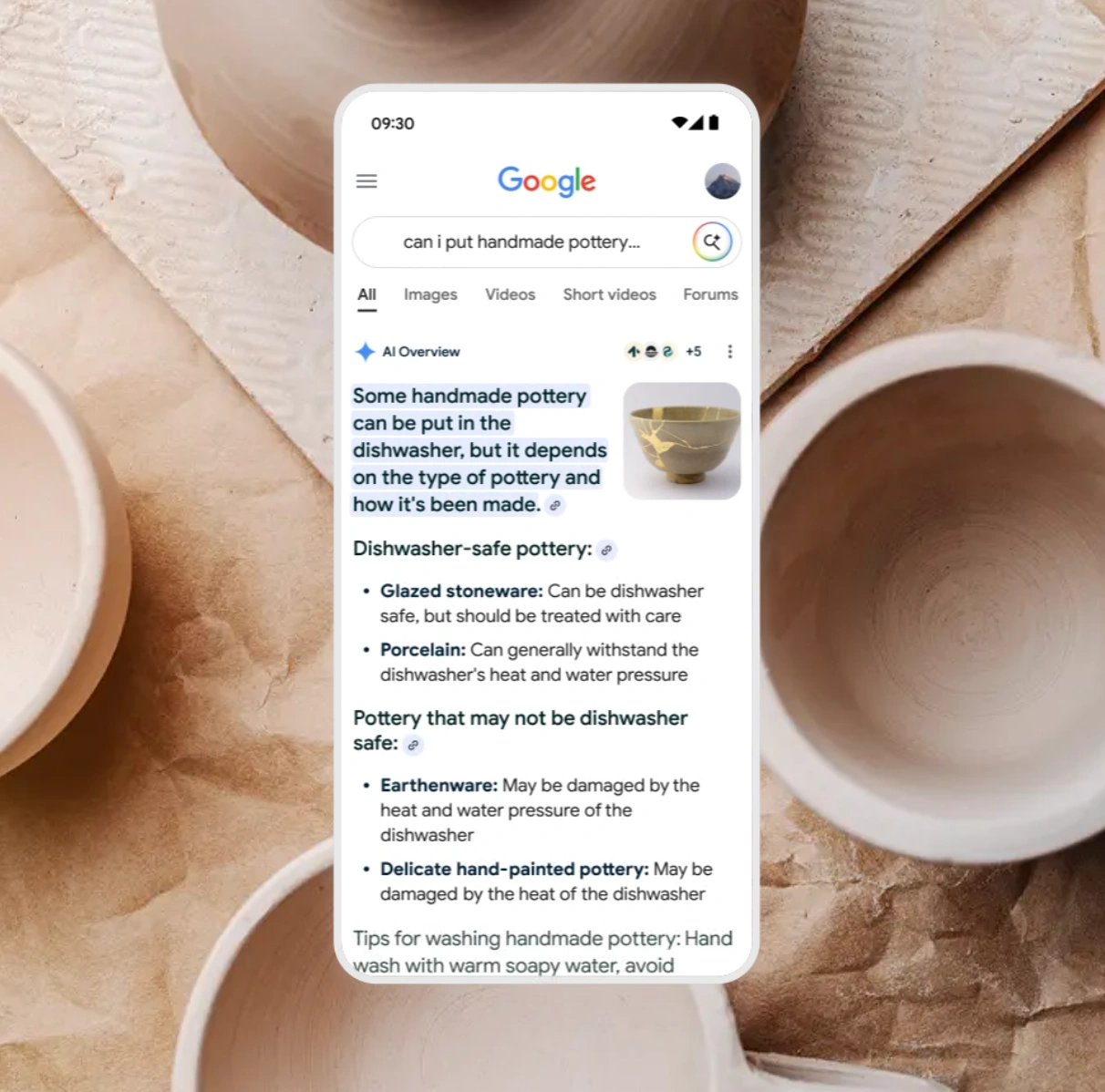

Google didn’t just bolt AI onto Search in 2025; it rebuilt the experience around it. AI Overviews pushed Search toward synthesized answers with links, and Google framed it as a major shift in how people ask longer, more complex questions. Then AI Mode moved that experience into a dedicated tab, designed for deeper reasoning, follow-ups, and multimodal inputs. The direction is clear: Search is becoming a thinking partner and, in some cases, an execution layer that can help complete tasks. (Google)

- Google said AI Overviews drove an increase of more than 10% in usage for the types of queries that show them, in big markets like the U.S. and India. (Google)

- AI Mode rolled out in the U.S. in 2025, with features like Deep Search and agentic capabilities discussed as part of the roadmap. (Google)

- Ads followed the interface shift too, with Google highlighting Ads in AI Overviews expanding to desktop and more countries in 2025. (Google)

2) Google Discover and News: AI summaries as the feed format

Scrolling used to be the default; then summaries showed up and rewired the habit. In 2025, Google rolled out AI summaries in Discover, changing what people see first from a single headline to an AI-written synthesis that cites multiple sources. Later, Google tested AI-powered article overviews on select Google News pages, pushing the same idea deeper into publisher environments. The cultural meaning is uncomfortable and obvious: attention is collapsing, and platforms are racing to compress information into something frictionless. (TechCrunch)

- AI summaries in Discover were described as rolling out in July 2025. (TechCrunch)

- Google tested AI article overviews on select Google News pages in December 2025. (TechCrunch)

- The product bet: people want “the point” first, and links second, even if that changes the publisher relationship. (TechCrunch)


3) Meta: a dedicated Meta AI app

Meta’s 2025 move was to treat its assistant like a product, not a sidebar. The Meta AI app gave people a standalone place to access the assistant, instead of only encountering it inside social flows. That matters because it positions Meta as an “AI companion” brand, not just a social network company, which is a huge brand identity shift. It also reflects a broader reality: assistants are becoming a new distribution channel, and platforms want to own that doorway. (Meta)

- Meta introduced the Meta AI app on April 29, 2025. (Meta)

- The product framing: a new way to access your AI assistant, separated from the feed. (Meta)

- The marketing implication: assistant ownership is becoming as strategic as platform ownership. (Meta)

4) Instagram: Your Algorithm turns AI into transparency

Instagram’s new Your Algorithm feature is fascinating because it uses AI to explain you to yourself. It shows an AI-generated summary of what you’ve been “into,” then lets you add or remove the topics shaping your Reels recommendations. That’s not just a control panel; it’s a trust play, because people are tired of feeds that feel random or manipulative. It also signals where social is heading: less mystery, more knobs you can turn. (The Verge) (Wired)

- Your Algorithm rolled out starting in the U.S. on December 10, 2025, with broader rollout described as coming soon. (The Verge)

- Wired described the interests summary as AI-generated, based on activity. (Wired)

- The social signal: personalization is no longer enough; people want legibility and control. (The Verge)

5) Apple: Apple Intelligence expands, and summaries become a system feature

Apple’s 2025 AI story wasn’t “look what AI can do,” it was “AI, but with Apple vibes.” Apple Intelligence expanded to many new languages and regions with iOS 18.4, iPadOS 18.4, and macOS Sequoia 15.4, pushing features like writing help and summarization into everyday defaults. This matters because Apple makes AI feel like infrastructure, not an app you open, which raises expectations across the entire market. When the phone itself starts summarizing and assisting, every other brand’s “AI feature” suddenly has to justify itself. (Apple)

- Apple announced the March 31, 2025 expansion tied to iOS 18.4 and related releases. (Apple)

- Apple’s support docs frame Apple Intelligence as a set of system-level features available on supported devices and versions. (Apple Support)

- The cultural meaning: AI is becoming ambient, and “no AI” is turning into a deliberate choice. (Apple)

6) Microsoft: Copilot Vision and Copilot Actions push beyond chat

Microsoft spent 2025 trying to make Copilot feel like a helper you can actually use in motion. Copilot Vision brought camera-based assistance to mobile, turning the assistant into something you can show the world to, not just type about. Then Copilot Actions pushed the idea further by letting Copilot complete tasks on the web with launch partners across travel and reservations. The societal shift is obvious: we’re moving from “tell me” to “do it,” and brands that integrated AI are racing to be the one you delegate to. (Microsoft) (The Verge)

- Microsoft said Copilot Vision on mobile became available to try for free in the U.S., with rollout plans described in its June 2025 release notes. (Microsoft)

- The Verge reported Copilot Actions and listed travel and booking partners like Booking.com, Expedia, Tripadvisor, and OpenTable. (The Verge)

- The product bet: assistants win when they save effort, not when they sound smart. (The Verge)

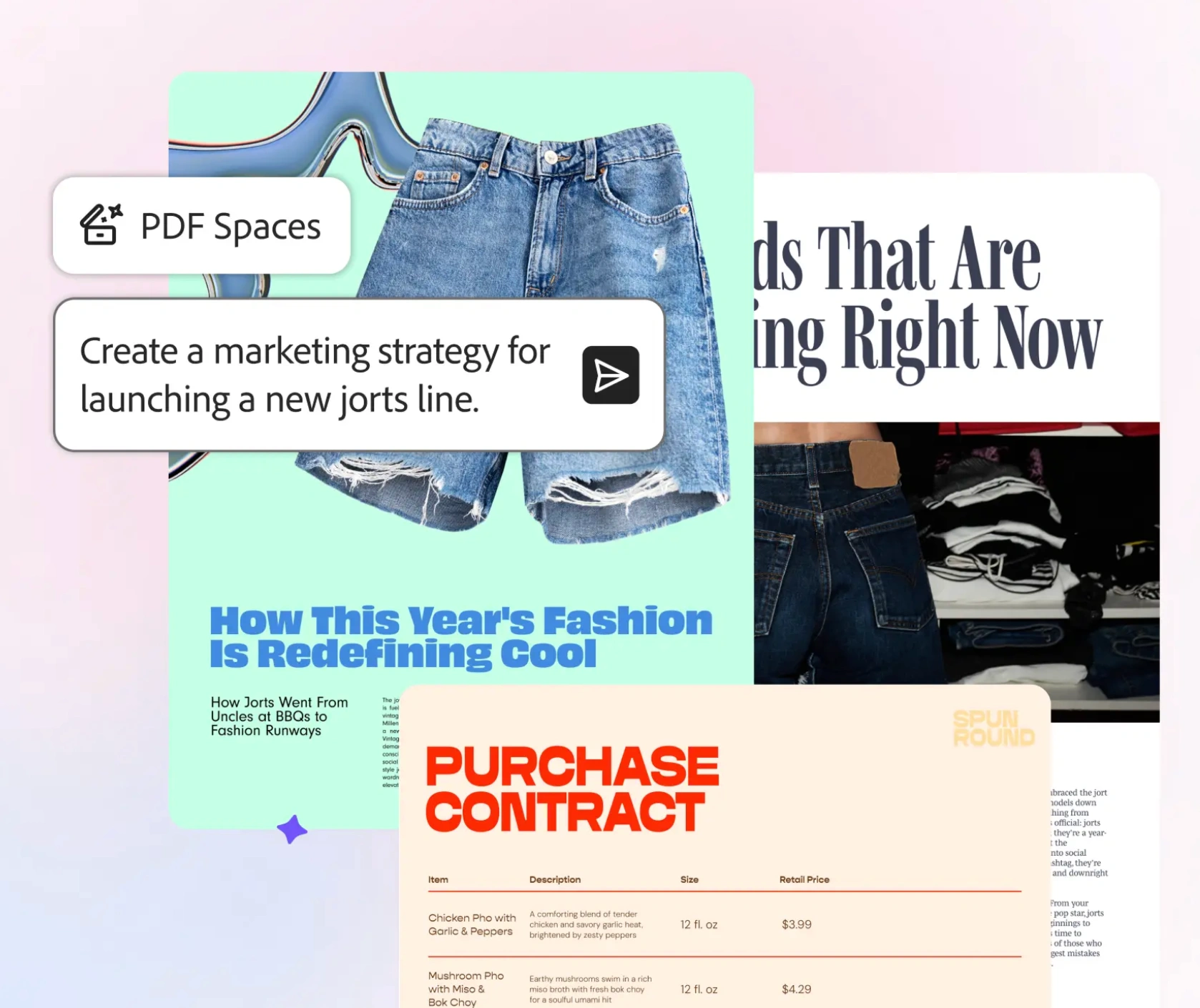

7) Adobe: from AI assistant to “apps inside ChatGPT”

Adobe’s 2025 strategy reads like a two-step: make creation conversational, then bring it to wherever conversation already lives. Adobe introduced an AI Assistant in Adobe Express, leaning into chat-style creation and integrations. Then in December, Adobe launched Photoshop, Express, and Acrobat for ChatGPT, so ChatGPT users could invoke Adobe tools through prompts. That’s a huge distribution move, because it treats ChatGPT as a front door for creative work instead of a competitor. (Adobe)

- Adobe announced an AI Assistant in Adobe Express in October 2025. (Adobe)

- Adobe announced Photoshop, Express, and Acrobat for ChatGPT on December 10, 2025. (Adobe)

- Reuters described the integration as enabling image editing, design, and PDF tasks through ChatGPT’s interface. (Reuters)

8) Canva: Canva AI, plus assistants that can operate your designs

Canva’s 2025 launches made one thing clear: design platforms want to become productivity platforms, and AI is the bridge. Canva introduced Canva AI as a conversational assistant at Canva Create 2025, aiming to generate and transform creative work from prompts. Later, Canva’s ecosystem approach showed up in integrations like Claude connecting to Canva, letting users create and edit designs through chat. The deeper story is that tools are becoming “API-able” to assistants, and brands that now use AI are starting to compete on how connectable their workflows are. (Canva) (The Verge)

- Canva introduced Canva AI at Canva Create 2025 as part of a suite of AI-powered tools and upgrades. (Canva)

- The Verge reported Claude’s Canva integration launching in July 2025 via Canva’s MCP server. (The Verge)

- The creative shift: the interface is moving from menus to conversation, especially for non-designers. (Canva)


9) Duolingo: AI video calls with Lily become language practice

Duolingo made AI feel like a character you already know, which is a marketing masterstroke. In 2025, Duolingo launched AI-powered Video Call for Android, letting Max subscribers have conversations with Lily. That takes “practice” from tapping answers to actually speaking, which is where language learning gets real, and scary. It also shows why brands with AI agents are winning: they’re turning anxiety into guidance, and guidance into habit. (Duolingo)

- Duolingo announced Video Call for Android on January 16, 2025. (Duolingo)

- The feature is positioned as spontaneous, realistic conversation practice with an AI-powered character. (Duolingo)

- The bigger meaning: AI isn’t only automating work, it’s also simulating social interaction for skill-building. (Duolingo)

10) Amazon: Rufus evolves into a shopping agent, and Alexa+ goes web-first

Amazon’s AI story in 2025 wasn’t subtle; it was embedded in the act of buying. Rufus expanded into more personalized shopping help, with Amazon describing features like finding deals, adding items to cart, and even auto-buying at a set price. Meanwhile, Alexa+ showed up as a web chatbot experience for some users at Alexa.com, reinforcing the idea that assistants need keyboards too, not just voices. This is the retail future Amazon wants: fewer filters, more delegation, and a shopping flow that feels like chatting with a helpful clerk.

- Amazon described Rufus gaining capabilities like auto-buy at a set price and cart actions. (Amazon)

- The Alexa+ website went live for some users, with a chatbot-style interface and productivity hooks. (The Verge)

- The social signal: convenience is winning, but only when it feels trustworthy and reversible.

11) Walmart: Sparky makes shopping feel like planning, not searching

Walmart’s Sparky is a perfect example of brands that integrated AI to remove friction that used to feel “normal.” Sparky synthesizes reviews, suggests items for occasions, and helps customers plan and compare purchases. Walmart framed it as agentic, with capabilities expected to expand into multimodal inputs and more actions over time. If Amazon’s play is “delegate your shopping,” Walmart’s play is “let’s make choosing feel easier than thinking.” (Walmart)

- Walmart announced Sparky on June 6, 2025 and described review synthesis and occasion-based recommendations. (Walmart)

- Retail Dive reported Sparky summarizing reviews and helping shoppers plan purchases. (Retail Dive)

- The societal meaning: people don’t want more options, they want fewer regrets. (Walmart)

12) Albertsons: agentic AI in the grocery aisle

Grocery might be the most underrated AI battleground, because it’s where routines live. Albertsons launched an agentic AI shopping assistant, reflecting how even practical categories are shifting toward conversational help. The appeal is obvious: groceries are repetitive, decision-heavy, and easy to personalize. When brands with AI agents enter essentials, AI stops feeling like tech and starts feeling like habit.

- Albertsons’ launch of an agentic AI shopping assistant was reported in late 2025. (Customer Experience Dive)

- The strategic shift: food retail is moving from “search and add” to “ask and decide.” (Customer Experience Dive)

- The behavior bet: repetition is the perfect training ground for personalization.

13) Shopify: Sidekick turns merchant tools into a conversation

Shopify’s Sidekick matters because it’s AI aimed at the person running the business, not the consumer. In 2025, Shopify described Sidekick leveling up into a more capable partner, and reporting pointed to improved reasoning across multiple data sources. That’s the agent shift in a sentence: not “here’s your dashboard,” but “here’s what’s happening and what to do next.” And it’s a reminder that brands that now use AI aren’t only chasing customers; they’re also chasing operator efficiency. (Shopify)

- Shopify positioned Sidekick as evolving from assistant to “business partner” in 2025. (Shopify)

- Digital Commerce 360 described Sidekick analyzing multiple data sources and suggesting strategic recommendations. (Digital Commerce 360)

- The bigger story: merchant tools are becoming decision tools, not just admin tools.

14) Klaviyo: AI shopping agents as a feature any brand can deploy

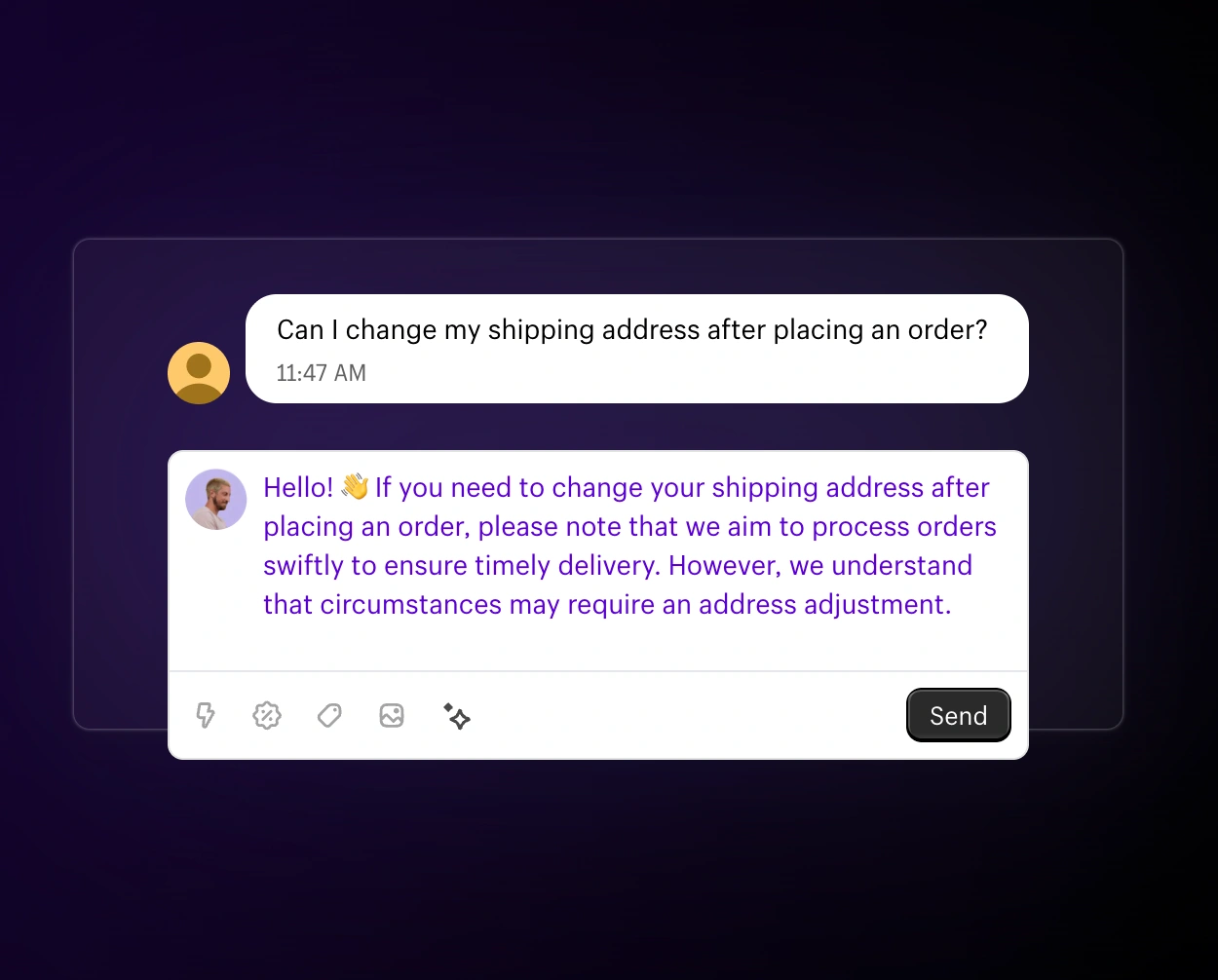

Klaviyo’s 2025 launch is a clue about the next phase: AI agents aren’t staying exclusive to the biggest retailers. Klaviyo introduced an AI shopping assistant positioned as a conversational agent trained on a brand’s storefront, catalog, and FAQs. The pitch is simple: always-on help, personalized recommendations, and fewer abandoned carts. When platforms productize agents like this, “brands with AI agents” stops being a flex and becomes table stakes. (Business Wire)

- The product framing: personalized, always-on support trained on real storefront data.

- The market signal: “agentic” is being packaged for the mid-market, not just enterprises.

15) Yelp: conversational local discovery and AI-powered business calls

Yelp’s 2025 AI expansion is about making local search feel like a dialogue instead of a directory. Yelp described expanding AI features to make discovery more conversational and visual, centered on Yelp Assistant. On the business side, Yelp Host and Yelp Receptionist use AI to answer and triage calls, take messages, and summarize them. It’s a reminder that AI isn’t only showing up in feeds; it’s moving into the unglamorous but crucial layer of real-world service. (Business Wire)

- Yelp positioned Yelp Assistant as part of a more conversational discovery experience in October 2025.

- Yelp Host and Yelp Receptionist use AI to handle calls and summarize messages.

- The societal tell: people want instant answers, but they still want a human experience on arrival.


16) Expedia: AI trip planning moves into Instagram and partner tools

Travel planning is basically research pain disguised as excitement, so it’s no surprise AI moved in fast. Expedia launched Trip Matching, integrating real-time AI-powered travel planning directly into Instagram, which is a huge clue about where discovery lives now. On the B2B side, Expedia also announced an AI-powered trip planner and related APIs for partners. It’s the same pattern everywhere: platforms want to turn browsing into decisions, and decisions into bookings, with less friction. (Expedia)

- Expedia said Trip Matching integrates AI-powered travel planning into Instagram in real time. (Expedia)

- Expedia Group announced a new AI-powered trip planner for B2B partners in October 2025.

- The culture shift: inspiration and planning are collapsing into a single tap.

17) Booking.com: agentic customer communication and AI at scale

Booking.com’s 2025 AI push highlights a quieter but massive use case: messaging. Booking.com announced customer-facing agentic AI innovations like Smart Messenger and Auto-Reply to streamline partner-to-guest communication. OpenAI also showcased Booking.com as an example of launching multiple AI-powered solutions to make travel planning more intuitive. That pairing matters because it shows how legacy categories are evolving, not by reinventing travel, but by reducing the tiny frictions that ruin it. (Booking.com) (OpenAI)

- Booking.com announced Smart Messenger and Auto-Reply on October 9, 2025. (Booking.com)

- The larger signal: service moments are being automated because patience is shrinking.

18) Salesforce: Agentforce 3 makes “AI agents” an enterprise product category

Salesforce’s Agentforce story is the enterprise version of what consumers experienced all year: assistants are becoming operators. Agentforce 3 focused on visibility and control, including Command Center for observing and managing agents, which is basically governance as a product feature. Reuters also reported on Agentforce 360 integrating across Salesforce tools to automate routine tasks, showing how seriously Salesforce is pushing agentic AI as a platform identity. The big societal takeaway is that companies aren’t only buying AI; they’re buying permission to let AI act inside critical systems. (Salesforce)

- Salesforce announced Agentforce 3 on June 23, 2025 and positioned it around visibility and control for scaling agents. (Salesforce)

- Reuters reported Salesforce’s Agentforce 360 integrated across its cloud tools and had thousands of clients. (Reuters)

- The trust reality: enterprise AI only scales when auditability and oversight scale too.

19) ServiceNow: agentic AI innovations built for autonomous resolution

ServiceNow leaned hard into “agentic” in 2025, framing AI as something that can autonomously solve complex enterprise challenges. ServiceNow announced AI Agent Orchestrator and AI Agent Studio, with availability timelines laid out for 2025. What’s interesting is how this mirrors consumer behavior: people want problems solved, not interfaces navigated. When brands that integrated AI bring autonomy into enterprise workflows, they’re really selling time and reduced cognitive load. (ServiceNow)

- ServiceNow announced agentic AI innovations on January 29, 2025, including AI Agent Orchestrator and AI Agent Studio.

- The release described availability for customers on the ServiceNow Platform in March 2025.

- The strategic bet: autonomy is the next competitive advantage after automation.

20) Zendesk: AI agents designed to resolve most support issues

Zendesk’s 2025 push is one of the clearest examples of AI being sold as outcome, not novelty. Zendesk announced new AI capabilities in October 2025, emphasizing AI agents and smarter automation. TechCrunch highlighted Zendesk’s claim that its new autonomous support agent could solve a large share of support issues without human intervention, alongside other agent layers. Whether every percentage point holds up or not, the message is unmistakable: customer service is becoming an AI-first battleground. (Zendesk) (TechCrunch)

- Zendesk’s press release on October 8, 2025 framed new AI capabilities inside its platform. (Zendesk)

- TechCrunch reported Zendesk’s claim about solving 80% of support issues with an autonomous agent, supported by additional agent types. (TechCrunch)

- The culture shift: people expect instant resolution, and waiting now feels like failure.

21) Intuit: Intuit Assist and AI agents inside QuickBooks

Intuit’s AI push shows how quickly “assistant” became the default promise in finance tools. Intuit positions Intuit Assist as a generative AI-powered financial assistant designed to give recommendations and reduce busywork. QuickBooks also published 2025 updates framed around AI agents and productivity improvements for businesses. The human truth underneath it is simple: money admin is stressful, and brands that now use AI are competing to remove that stress first. (Intuit) (QuickBooks)

- Intuit describes Intuit Assist as a generative AI-powered financial assistant. (Intuit)

- QuickBooks highlighted AI agents and new AI-driven tools in a November 12, 2025 product update. (QuickBooks)

- The deeper pattern: AI is being positioned as “less admin, more confidence.” (Intuit)

What This Explosion of AI Features Says About Society

The rush of brands that integrated AI in 2025 wasn’t only a technology story; it was a pressure story. People are overloaded, so summaries and overviews are the new coping mechanism, and brands with AI agents are the new shortcut for decisions we don’t want to think through. At the same time, trust got more fragile, which is why transparency tools like Your Algorithm matter, and why governance shows up so heavily in enterprise agent platforms. The weird paradox is that we’re delegating more while demanding more control, and that tension is shaping what brands that now use AI have to design around.

- Compression: AI summaries exist because attention is scarce, not because curiosity disappeared.

- Delegation: agents are winning because time feels more expensive than money in many moments.

- Control: the next differentiator is trust, not cleverness, especially as assistants act on our behalf.

FAQ

What counts as a brand that integrated AI in 2025?

A brand qualifies when AI becomes a product feature customers can actually use, like AI overviews in search, AI summaries in a feed, a shopping assistant in an app, or an AI agent that can take action in a workflow.

Are AI agents the same thing as chatbots?

Not anymore. A chatbot answers questions, while an AI agent is increasingly designed to complete tasks, follow rules, and operate across systems, like booking, purchasing, or resolving a support issue. That “action layer” is why the term agentic shows up so much in 2025 releases.

Why do so many brands that now use AI ship summaries first?

Because summaries reduce effort instantly. They make a feed, a search result, or a product page feel easier to understand, which creates a fast “this app gets me” sensation. That’s why AI summaries showed up across search and discovery experiences in 2025.

Which 2025 AI launches changed shopping the most?

Rufus and Sparky are two of the clearest consumer-facing shifts because they move shopping from filtering to conversation, with features like review synthesis, recommendations, and cart actions. The broader trend is that retailers want to become decision assistants, not just marketplaces.

What’s the biggest risk as more brands integrate AI?

Trust erosion. When AI summarizes, recommends, or acts, mistakes feel more personal, and the cost of confusion rises fast. That’s why transparency controls, observability, and governance are showing up alongside the most ambitious agent rollouts.

The Real 2025 Shift: AI Became the Interface

2025 wasn’t the year AI got invented. It was the year brands that integrated AI made it unavoidable. The brands that now use AI are teaching customers to expect a summary, a recommendation, and a next step, and the brands with AI agents are pushing the expectation even further into delegation and action. The next advantage won’t be “we have AI,” it’ll be “our AI feels safe, clear, and genuinely helpful.” And once that becomes the standard, what happens to brands that don’t meet it?